Big Data, Little Drones: How UAVs are Changing the World of AI

Artificial intelligence, with its incredible potential, is a red-hot topic right now, and for good reason. It’s not just for Google search algorithms, Tesla’s self-driving cars, or creepy autonomous robots. AI is starting to catch fire in the drone industry as well. But how does AI apply to drones?

To answer that question, it's necessary to think about “big data”: the concept of collecting and organizing all kinds of data for the purpose of extracting intelligence from it. Google is a great example: by providing free email and web search services, it collected a vast amount of anonymized user data, categorized it, stored it, and developed a way to sift through it for better search results and targeted advertising. It’s now effectively the king of search and internet ad placement, and it gets paid billions for it. But first Google needed to figure out how to sort through all of that “big data” and extract valuable insights that wouldn’t have been possible through manual human effort.

Google created algorithms to look for patterns and started to figure out trends in user behavior. The algorithms got more complex, and the web of insights grew. In a nutshell, this is “machine learning”: telling a computer to match bits of information to one another to spit out a result.

Artificial intelligence stands on the powerful shoulders of machine learning. Advances in machine learning help make AI better. It starts with manual input: give a computer a million images of cats, and the computer uses its considerable computing resources to break down each image, pixel by pixel, comparing it to the others and noticing patterns all by itself, much like a human infant. Slowly, the computer learns what cats look like.
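To make the cat example concrete, here is a minimal sketch of learning from labeled examples. It is a deliberately tiny stand-in, not how production image recognition (or our own system) works: each "image" is a hypothetical list of four grayscale pixel values, the labels and numbers are made up, and the technique is a simple nearest-centroid classifier rather than a neural network.

```python
# Toy illustration only: real image classifiers use neural networks and
# millions of photos. Each "image" here is a hypothetical 4-pixel
# grayscale list, and all labels and values are invented.

def train(examples):
    """Average the pixels of every labeled example to get one
    prototype (centroid) per label."""
    sums, counts = {}, {}
    for pixels, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(pixels)
            counts[label] = 0
        sums[label] = [s + p for s, p in zip(sums[label], pixels)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}

def classify(model, pixels):
    """Return the label whose prototype is closest, pixel by pixel."""
    def distance(prototype):
        return sum((a - b) ** 2 for a, b in zip(prototype, pixels))
    return min(model, key=lambda label: distance(model[label]))

training_set = [
    ([0.9, 0.8, 0.9, 0.7], "cat"),
    ([0.8, 0.9, 0.8, 0.8], "cat"),
    ([0.1, 0.2, 0.1, 0.3], "not cat"),
    ([0.2, 0.1, 0.2, 0.2], "not cat"),
]
model = train(training_set)
print(classify(model, [0.85, 0.75, 0.9, 0.8]))
```

The more labeled examples the prototypes are averaged over, the better they tend to represent each category, which is the same reason real training needs so many images.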

So how do drones come into all of this?

A Wealth of Information

The Skycatch AI program started a year and a half ago when we teamed up with NVIDIA and Komatsu to develop what was called the Discover1x project. The idea on its surface was simple: begin to train AI that would be able to identify and predict patterns on the job sites our drones fly over. Specifically, the AI would identify inefficiencies and errors faster than any human could, and the site could even correct itself autonomously in real time: a sentient, self-healing job site.

In reality, training AI is anything but simple. It’s a time-consuming and incredibly difficult process. Like the cat example above, it starts with collecting millions of images: pictures of bulldozers, excavators, cranes, trucks, humans, stockpiles, materials, and on and on. Then we have to start with an infant AI brain and begin the training process: this is a crane, this is a stockpile, this is a human. It takes an incredible amount of time before the first inkling of “intelligence” begins to form.

However, our time spent so far has been paying off.

The Infant AI

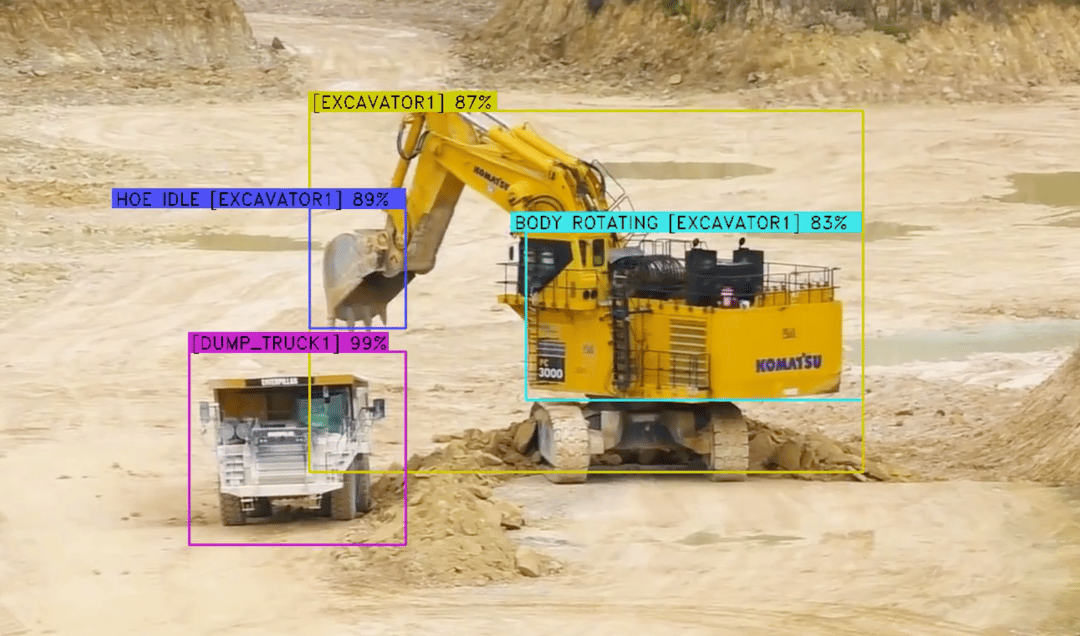

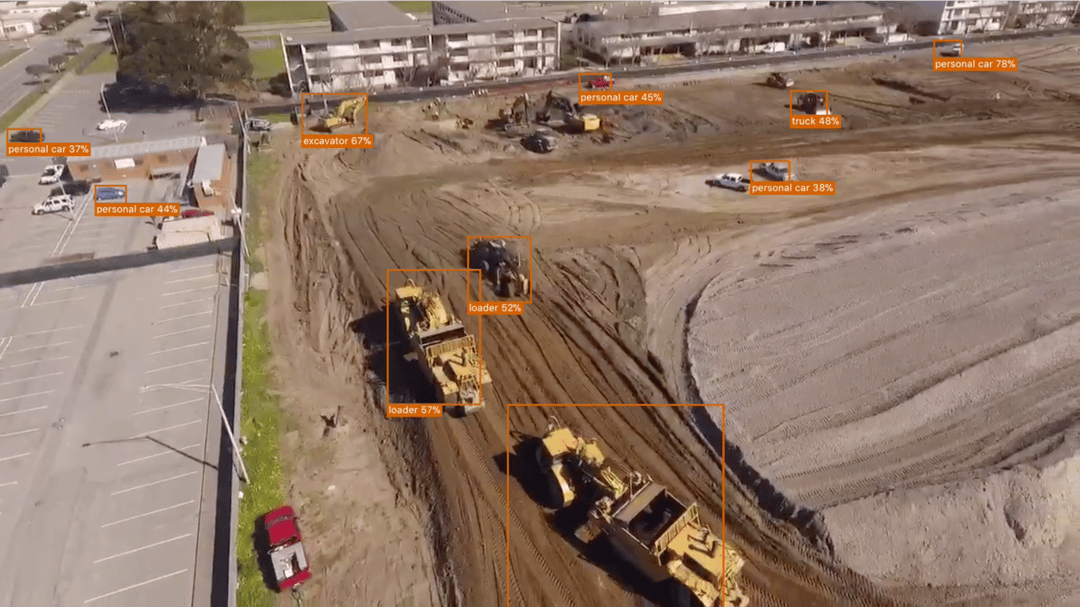

Real-time labeling of equipment on an active project

Our young AI brain, stored in our Discover1x and running off of NVIDIA’s TX2, has been able to consistently identify the kind of heavy machinery seen in the aerial and ground-based video shot from our Explore1 drone. Not only can it identify machinery, but it knows which type of machinery it is.

And while identifying machinery is great, that is not all we’re interested in. Our end goal is a fully autonomous job site. So what we had to teach our AI brain next was to identify the individual parts of the equipment. For an excavator, for instance, our Data Scientists trained it to recognize the wheels, the cabin, the arm, and the excavating shovel.

Next, our AI brain starts to evolve a deeper understanding, as we begin to label actions, such as digging, dumping, moving, and so on. The system can now look at a sequence of actions and understand what the machine is doing.

Pushing our infant AI brain further, our next step was to bring in a distinctly human concept: contextualizing. It brings together all of the individual aspects of what we’ve done before and sets them to a clock. How many times can an excavator rotate its body in 12 hours? How many times can an excavator's arm move up and down? How many times can the excavator shovel dirt from the earth?
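As a rough sketch of what setting actions to a clock could look like, the snippet below counts how often each labeled action occurs in a time window. Everything in it is an assumption made for illustration: the `frames` list stands in for per-frame output from an action classifier, the function names `to_events` and `count_actions` are hypothetical, and the timestamps and labels are invented. It is not a description of the Discover1x pipeline.

```python
from itertools import groupby

# Hypothetical per-frame output of an action classifier:
# (timestamp in seconds, action label). All values are made up.
frames = [
    (0, "digging"), (1, "digging"), (2, "rotating"), (3, "rotating"),
    (4, "dumping"), (5, "rotating"), (6, "digging"), (7, "digging"),
    (8, "rotating"), (9, "dumping"),
]

def to_events(frames):
    """Collapse consecutive frames with the same label into
    (action, start_time, end_time) events."""
    events = []
    for action, run in groupby(frames, key=lambda f: f[1]):
        run = list(run)
        events.append((action, run[0][0], run[-1][0]))
    return events

def count_actions(events, window_start, window_end):
    """Count how many events of each action begin inside the window."""
    counts = {}
    for action, start, _end in events:
        if window_start <= start < window_end:
            counts[action] = counts.get(action, 0) + 1
    return counts

events = to_events(frames)
counts = count_actions(events, 0, 10)
print(counts)
```

For the 12-hour question above, the same call would use a window of `12 * 3600` seconds over a full shift of labeled frames.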

This is where Skycatch is now: in the latest of many phases, with years more to go. But as we expand operations and continue to collect more “big drone data”, we expect our young AI brain to develop faster and faster.

Big Data, Small Sensors

We’re already working with Komatsu to deploy our Discover1x units across many job sites. They are capable of ingesting data of all sorts - video, images, sound, and more. Eventually, any customer will be able to develop on our Discover platform and begin training their own AI, increasing the collective consciousness.

As technology becomes more advanced, powerful sensors of every variety and complexity will shrink and become more adaptable and less expensive. We expect that within five years, LiDAR sensors will be small enough and affordable enough to attach to nearly anything without affecting weight or form factor. The RTK and edge computing technology in our Edge1 device will become small enough to place onto any device, providing near real-time AI processing. The applications for this technology are endless.

We dream of a day in which humans work side by side with AI, monitoring and conducting massive construction projects that can run completely autonomously. These sites will leave the bigger decisions to humans while keeping them safer and completing jobs faster than ever before.

Until then, we’ll continue to develop ways in which our high-accuracy drone data can benefit our customers in the present, while we work tirelessly with our partners to accelerate progress worldwide.

- David Chen, Head of Research and Development